Table of contents

- What you'll need:

- MindAR Background:

- Augmented Reality Overview:

- Tracking Target Images:

- Directory structure:

- index.html Breakdown:

- A NOTE on the p5.js version ⚠️

- Register AFRAME Component

- HTML Canvas Elements

- MindAR and AFRAME tracking

- Final index.html

- Helpful Testing/Debugging Tip ⚠️

- p5 code in the sketch.js file

- p5 Sketch Global Variables Declaration

- Preload Hydra in p5

- p5 Bezier Curve Shape Mask

- p5 Setup

- Implement Custom Shape

- p5 Draw

- Isolate hydra-synth to shapeMask

- Rendering Hydra-Synth Graphics in AR

- Conclusion

- Credits:

I was very fortunate to be one of the recipients of the first Hydra microgrant. I proposed this tutorial, and I hope it's useful. Thanks!

We're going to combine the Hydra live-coding visuals JavaScript library with the MindAR (mind-ar-js) library and p5.js. This tutorial is beginner-friendly, but if you're already advanced with any of these tools, you might find it helpful as one way to apply your skills to AR.

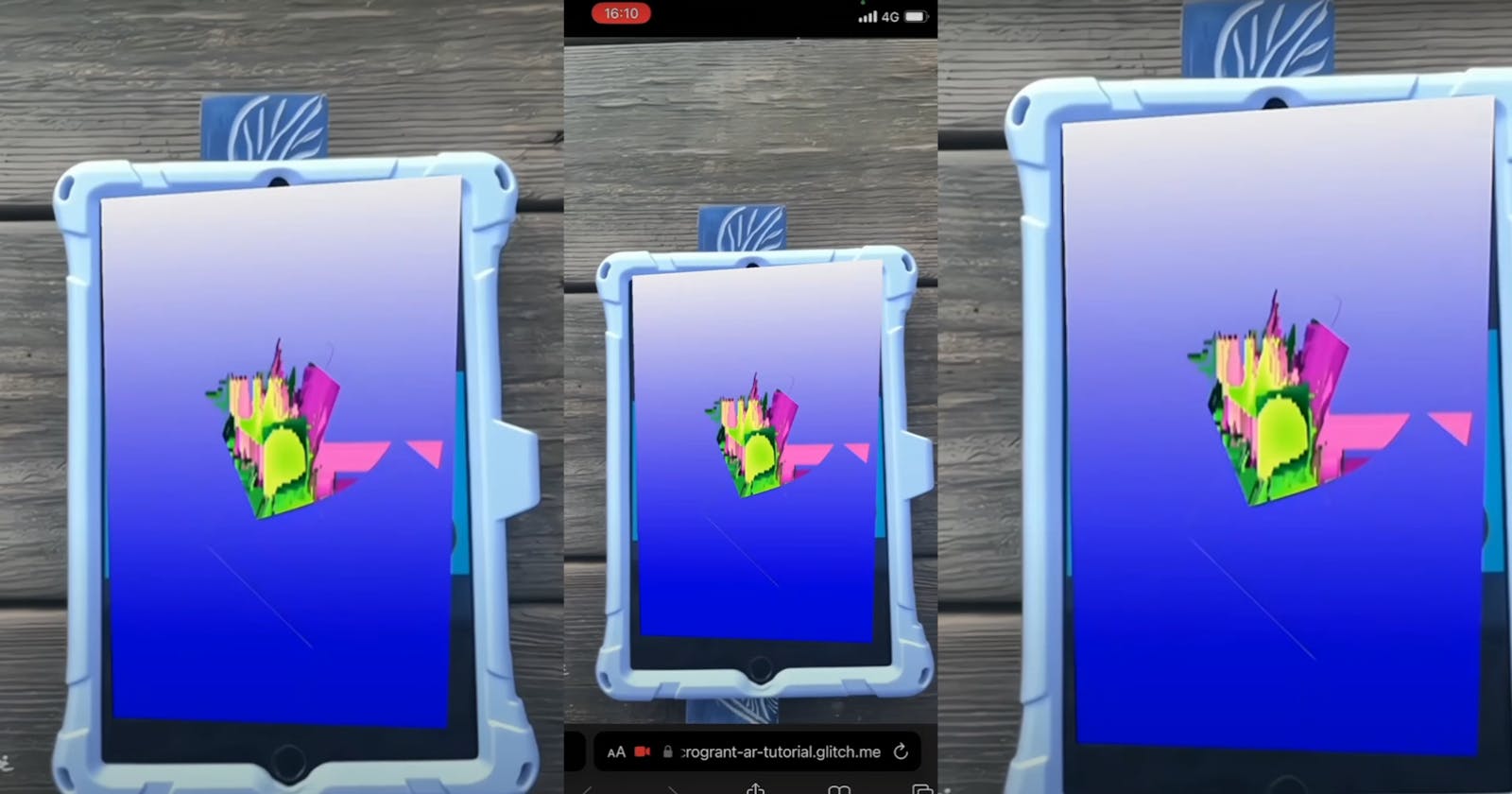

We'll be making this augmented reality poster. It uses MindAR to track an image target; the poster appears on top of the target and follows it.

Disclaimer: I'm not a graphic designer, but I think this tutorial is a good demo of one way you can use these tools for your art, graphics, games projects, etc.

What you'll need:

A target image for the AR poster to track. The documentation for the MindAR library explains how to prepare one. You can print out the target image or display it on another device.

A separate device with a camera to display the augmented reality poster. I'll use a mobile phone, but a tablet works too. You can also use another laptop or computer with a webcam, although it might be awkward to position.

A code editor. I'll use Glitch in this tutorial.

MindAR Background:

MindAR is an open-source web augmented reality library that supports image tracking and face tracking. It was originally written with A-Frame integration in mind, and as of version 1.1.0 it also supports direct integration with three.js.

Interestingly, MindAR uses TensorFlow.js as a WebGL backend for its computations rather than for training and deploying machine learning models, which is what TensorFlow is mostly used for.

Augmented Reality Overview:

Because MindAR projects can run in plain static HTML files, integrating Hydra and p5.js is straightforward.

The Image Tracking Quick Start section in the MindAR documentation is the template we'll use in this tutorial, with the addition of Hydra and p5.js.

We will build an AR webpage that starts your device's camera.

The camera will detect the image target, which we specify in code, and track it. This tutorial uses the default image from the MindAR docs.

The webpage will show the augmented reality object on top of the image and track it.

Tracking Target Images:

If you want MindAR to track an image other than the default one from the official documentation, which we'll be using, read through this guide written by the creator of the MindAR library:

How to Choose a Good Target Image for Tracking in AR - Part 1/4 - HiuKim Yuen

Then follow the three steps in the MindAR docs to compile your image into a targets.mind file, which we reference in the HTML.

Compile Target Images - MindAR - Documentation

Directory structure:

This is how the files are structured for this project.

hydra-mindAR-poster/
├─ sketch.js
├─ index.html
└─ README.md

index.html Breakdown:

In the head tag of the index.html file, load the scripts for the libraries we'll need to make AR posters with Hydra and p5.js.

<!-- hydra-mindAR-poster/index.html -->
<html>
  <head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1" />
    <title>AR_Hydra_Tutorial</title>
    <!-- load AFRAME + MindAR + p5.js + hydra-synth + your sketch -->
    <script src="https://aframe.io/releases/1.3.0/aframe.min.js"></script>
    <script src="https://cdn.jsdelivr.net/npm/mind-ar@1.2.0/dist/mindar-image-aframe.prod.js"></script>
    <!-- official p5 -->
    <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/1.7.0/p5.min.js"></script>
    <!-- unofficial p5 (the modified v1.5.0 used by an earlier version of this tutorial) -->
    <!-- <script src="https://cdn.statically.io/gh/museumhotel/hydra_mg_tutorial/main/libs/p5.min.js"></script> -->
    <script src="https://unpkg.com/hydra-synth"></script>
    <script src="sketch.js"></script>
    <!-- ... -->

A NOTE on the p5.js version ⚠️

This tutorial is a bit hacky in how it uses the p5.js library. To follow it without issues, use the same p5.js version (v1.7.0) that is loaded in the index.html head tag above.

An earlier version of this tutorial used an unofficial, modified p5.js v1.5.0 (October 18, 2022): the commented-out unofficial p5 script tag above. If you look at that file, I've left a comment showing where the p5 library's code was modified.

As of p5.js v1.7.0, this modification has been merged into p5.js and is officially supported, so the official v1.7.0 build is the one we load.

The update lets createGraphics() render onto an existing HTML <canvas> element instead of creating a new one. We'll cover this when writing the JavaScript code.

If you'd like an alternative example of p5.js integration with hydra-synth and a different AR library, see this example using the A-Frame P5 AR library created by Craig Kapp. It tracks a QR code instead of an image.

Register AFRAME Component

In the same head element, include the script below. Before you can use MindAR's AR functionality through A-Frame, you need to register an A-Frame component and declare its dependencies.

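The component defines a tick() handler, which A-Frame calls on every render frame; you don't call it yourself. This matters because the poster is animated: setting material.map.needsUpdate = true tells three.js to re-upload the canvas texture, so each new frame of the p5 sketch appears on the plane.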

hydra-mindAR-poster/index.html

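...
<script type="text/javascript">
  AFRAME.registerComponent("canvas-updater", {
    // make sure the geometry and material components initialise first
    dependencies: ["geometry", "material"],
    // A-Frame calls tick() on every render frame
    tick: function () {
      let material,
        el = this.el;
      material = el.getObject3D("mesh").material;
      if (!material.map) {
        return;
      }
      // flag the canvas texture so three.js re-uploads it
      material.map.needsUpdate = true;
    },
  });
</script>
...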
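If you'd like the component to be a little more defensive, here is a minimal sketch of the same canvas-updater logic kept as a plain object. canvasUpdaterDefinition is a hypothetical name, not something MindAR or A-Frame provides. The only change is an extra check for a missing mesh; with the geometry and material dependencies declared, the mesh should normally exist already, so treat the check as a precaution rather than a required fix. Keeping the definition in a variable also lets you exercise tick() with a stub element outside the browser.

```javascript
// Hypothetical, slightly more defensive version of the "canvas-updater" definition
const canvasUpdaterDefinition = {
  dependencies: ["geometry", "material"],
  tick: function () {
    // getObject3D("mesh") returns undefined if the mesh hasn't been built yet
    const mesh = this.el.getObject3D("mesh");
    if (!mesh || !mesh.material || !mesh.material.map) {
      return;
    }
    // Ask three.js to re-upload the canvas texture on the next render
    mesh.material.map.needsUpdate = true;
  },
};

// In the page: AFRAME.registerComponent("canvas-updater", canvasUpdaterDefinition);

// Outside the browser, a stub element is enough to check the behaviour
const stubTexture = { needsUpdate: false };
const stubEl = { getObject3D: () => ({ material: { map: stubTexture } }) };
canvasUpdaterDefinition.tick.call({ el: stubEl });
console.log(stubTexture.needsUpdate); // true
```

In index.html you would pass this object to AFRAME.registerComponent() in place of the inline object literal; the attribute on the <a-plane> stays canvas-updater.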

HTML Canvas Elements

In the body, declare one canvas for the p5 sketch and a separate canvas to render the Hydra visuals. We set the CSS visibility property to hidden on the Hydra canvas: if you don't hide the canvas with id="hydra_canvas", it renders in the top left, on top of the p5 sketch. That can be useful for debugging, but I've hidden it in the code below.

It's important to set up a canvas for Hydra here ahead of time: p5 grabs the <canvas> with id="hydra_canvas" to extract the Hydra texture.

hydra-mindAR-poster/index.html

...
<body>
  <!-- canvas containing the p5.js poster graphics -->
  <canvas id="canvas"></canvas>
  <!-- hydra visualization canvas -->
  <canvas id="hydra_canvas" style="visibility: hidden"></canvas>
  ...

MindAR and AFRAME tracking

Finally, add the elements that will render the AR scene. MindAR comes with an A-Frame extension that makes it easy to construct a 3D scene.

You should read the section of the MindAR documentation on building the HTML page, but I've included the most relevant explanations for the elements of the AR poster here.

- Within <a-scene> you can see the property mindar-image="imageTargetSrc: https://cdn.glitch.global/ea61c5ab-26cf-45ec-9524-2f17ab8c295f/mind_test_target.mind?v=1688944051226; filterMinCF:0.0005; filterBeta: 0.1;". The imageTargetSrc part of mindar-image tells the AR engine the location of the compiled .mind file, which is produced by the steps in the Tracking Target Images section above. The file contains the image information the engine uses to recognise the target image in the device's camera feed. The AR poster will appear on top of this image.

- The <a-entity> element has a mindar-image-target="targetIndex: 0" property, which tells the engine to detect and track a particular image target. The targetIndex is always 0 if your targets.mind contains only a single image. MindAR can compile and track multiple image targets, but that is beyond the scope of this tutorial.

- The <a-plane> element is the object we want to show on top of the target image. Since this tutorial makes a poster, we'll use a flat plane, but A-Frame has other geometric primitives as well.

- The material="src:#canvas" property and the canvas-updater attribute on the <a-plane> link the plane to the p5 canvas and to the A-Frame component we registered earlier. The component's tick handler, defined in the earlier <script> tag, flags the canvas texture named in material="" for an update on every render frame. The AR poster's graphics come from our p5.js sketch, which renders onto the HTML <canvas> element with id="canvas".

  <!-- MindAR within AFRAME a-scene element-->
  <a-scene
    mindar-image="imageTargetSrc: https://cdn.glitch.global/ea61c5ab-26cf-45ec-9524-2f17ab8c295f/mind_test_target.mind?v=1688944051226; filterMinCF:0.0005; filterBeta: 0.1;"
    vr-mode-ui="enabled: false"
    device-orientation-permission-ui="enabled: false"
  >
    <a-camera position="0 0 0" look-controls="enabled: false"></a-camera>
    <a-entity id="example-target" mindar-image-target="targetIndex: 0">
      <a-plane
        position="0 0 0"
        scale="1.05 1.05 1.05"
        height="1.4145"
        width="1"
        rotation="0 0 0"
        material="src:#canvas"
        canvas-updater
      ></a-plane>
    </a-entity>
  </a-scene>
</body>
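One detail worth checking in the <a-plane> above: width="1" and height="1.4145" give a ratio close to √2 (about 1.4142, the ISO A-series paper ratio), while the p5 canvas is 895 × 1280, a ratio of about 1.4302. The canvas texture is stretched to fill the plane, so it ends up roughly 1% shorter than the canvas proportions. If you want an exact match, a small hypothetical helper like planeHeightFor() below can derive the height from the canvas size; whether the difference matters is a design choice.

```javascript
// Hypothetical helper: the <a-plane> height that keeps the canvas texture's
// aspect ratio for a given plane width.
function planeHeightFor(canvasWidth, canvasHeight, planeWidth = 1) {
  if (canvasWidth <= 0) {
    throw new RangeError("canvasWidth must be positive");
  }
  return (canvasHeight / canvasWidth) * planeWidth;
}

// The tutorial's canvas is created with p.createCanvas(895, 1280)
console.log(planeHeightFor(895, 1280).toFixed(4)); // 1.4302
console.log(Math.SQRT2.toFixed(4)); // 1.4142, close to the height="1.4145" used above
```

You would then write height="1.4302" on the <a-plane>. The scale attribute multiplies all three axes by the same 1.05, so it doesn't change the ratio.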

Final index.html

If you're following this tutorial your index.html file should look like this:

hydra-mindAR-poster/index.html

<html>
  <head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1" />
    <title>AR_Hydra_Tutorial</title>
    <!-- load AFRAME + MindAR + p5.js + hydra-synth + your sketch -->
    <script src="https://aframe.io/releases/1.3.0/aframe.min.js"></script>
    <script src="https://cdn.jsdelivr.net/npm/mind-ar@1.2.0/dist/mindar-image-aframe.prod.js"></script>
    <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/1.7.0/p5.min.js"></script>
    <script src="https://unpkg.com/hydra-synth"></script>
    <script src="sketch.js"></script>
    <!-- Register the "canvas-updater" AFRAME component; A-Frame calls its tick handler every frame -->
    <script type="text/javascript">
      AFRAME.registerComponent("canvas-updater", {
        dependencies: ["geometry", "material"],
        tick: function () {
          let material,
            el = this.el;
          material = el.getObject3D("mesh").material;
          if (!material.map) {
            return;
          }
          material.map.needsUpdate = true;
        },
      });
    </script>
  </head>
  <body>
    <!-- canvas containing the p5.js poster graphics -->
    <canvas id="canvas"></canvas>
    <!-- hydra visualisation canvas hidden -->
    <canvas id="hydra_canvas" style="visibility: hidden"></canvas>
    <!-- MindAR within AFRAME a-scene element-->
    <a-scene
      mindar-image="imageTargetSrc: https://cdn.glitch.global/ea61c5ab-26cf-45ec-9524-2f17ab8c295f/mind_test_target.mind?v=1688944051226; filterMinCF:0.0005; filterBeta: 0.1;"
      vr-mode-ui="enabled: false"
      device-orientation-permission-ui="enabled: false"
    >
      <a-camera position="0 0 0" look-controls="enabled: false"></a-camera>
      <a-entity id="example-target" mindar-image-target="targetIndex: 0">
        <a-plane
          position="0 0 0"
          scale="1.05 1.05 1.05"
          height="1.4145"
          width="1"
          rotation="0 0 0"
          material="src:#canvas"
          canvas-updater
        ></a-plane>
      </a-entity>
    </a-scene>
  </body>
</html>

Helpful Testing/Debugging Tip ⚠️

To test or debug the p5.js code without any of the AR functionality, comment out the whole <a-scene>...</a-scene> element, including the opening tag that holds the mindar-image attribute. If you're using the online Glitch editor, highlight the code and press Ctrl/Cmd + / to comment it out.

p5 code in the sketch.js file

This tutorial won't focus much on the p5.js graphics. To make it clear which code is for p5.js and which is for the Hydra graphics, I've written the p5 code in instance mode rather than global mode.

p5 Sketch Global Variables Declaration

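Declare the sketch's variables before the p5 preload and setup functions. In instance mode they live inside the function passed to new p5(), so they are shared by all of the sketch's functions without being true globals. Here we declare a template literal for the poster's text, plus variables for the Hydra graphics, for the custom shape we'll apply the Hydra graphics onto, and for time.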

//hydra-mindAR-poster/sketch.js
//new p5 instance mode instance
let p5Sketch = new p5((p) => {
  //poster variables
  let posterLayer,
    txts,
    posterTxt = {
      string: `Hydra Microgrant 2022 AR Poster

I proposed a tutorial to create an augmented reality poster that uses the hydra-synth live coding video synth engine within javascript projects.

This poster is the result of the tutorial, hopefully it's helpful and shows some of what is possible when you need an augmented reality poster.

The other javascript dependencies in this project are p5.js and MindAR.

It is based on a project by artist and educator Ted Davis.

Github repo:

https://t.ly/uY5Fk

Instagram demo:

https://t.ly/4wFaZ

This is the original format.

Format

Print: 895 × 1280 mm, F4 (Weltformat)

Screen: 895 × 1280px

I've previously implemented AR into p5.js with hydra-synth using the A-Frame P5 AR library by Craig Kapp.

Github repo:

https://t.ly/RmbuC

A-Frame P5 AR Library:

https://t.ly/af0iO

Date: ${p.day()}/${p.month()}/${p.year()}

Time: ${p.hour()}:${p.minute()}:${p.second()}

To create a QR code to track your AR graphics you could use this one:

https://www.qrcode-monkey.com/

`,
    };
  //variables for hydra sketch
  let h1;
  let hydraCG;
  let hydraImg;
  let hydraCanvas;
  //custom shape variables
  let shapeMask;
  let shapeVerts = [];
  const numPoints = 20;
  let centerPoint;
  //global time variables
  let seconds;
  let refreshRate = 25;
  let showBG = false;
  //...

Preload Hydra in p5

Because we're using p5.js in instance mode, the sketch function receives the p5 instance as p, and that's how we access the p5.js methods. To initialise Hydra in preload(), we assign our function to p.preload = () => {}.

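You can find other ways to initialise Hydra in the hydra-synth documentation. For this tutorial we'll also use hydra-synth in non-global mode (makeGlobal: false), so every Hydra function is called through h1 instead of being added to the global scope.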

//hydra-mindAR-poster/sketch.js
//...
p.preload = () => {
  //instantiate hydra first
  h1 = new Hydra({
    canvas: document.getElementById("hydra_canvas"),
    detectAudio: false,
    makeGlobal: false,
  }).synth;
};
//...

p5 Bezier Curve Shape Mask

The hydra-synth graphics on the AR poster only appear inside this moving custom shape drawn in p5. You can use other shapes or render your Hydra graphics on the entire canvas, but this tutorial shows you how to isolate the Hydra graphics to a shape.

As mentioned earlier, this tutorial won't cover how to make the shape in detail; it's an experiment with the p5 bezierVertex() method. Here it is in a CodePen p5 sketch so you can see the shape and its movement in isolation. To learn more about curves and procedural shapes in p5, look here and here.

p5 Setup

Create the p5 canvas and set its dimensions. Then create the p5 createGraphics() buffers: one renders onto the AR poster, and the others hold the Hydra graphics and the shape they are displayed on.

All of the createGraphics() buffers in p.setup = () => {} are the same size as the sketch's canvas, so the same co-ordinates mean the same position in every buffer, and graphics line up when they are drawn from one buffer onto another.

//hydra-mindAR-poster/sketch.js
//...
p.setup = () => {
  p.frameRate(refreshRate);
  p.pixelDensity(1);
  p.createCanvas(895, 1280);
  //p.createCanvas(p.min(p.windowWidth), p.min(p.windowHeight));
  //Buffer to draw the hydra graphics onto, so p5 can use them as an image later.
  hydraCG = p.createGraphics(p.width, p.height);
  //Buffer for the custom shape that the hydra graphics will be restricted to.
  shapeMask = p.createGraphics(p.width, p.height);
  //Buffer that renders all the graphics onto the AR poster.
  posterLayer = p.createGraphics(
    p.width,
    p.height,
    p.P2D,
    document.getElementById("canvas")
  );
  //Split poster text by paragraph.
  txts = posterTxt.string.split("\n\n");
  //Change from the p5 defaults (these apply to the main canvas, not the buffers).
  p.rectMode(p.CENTER);
  p.textAlign(p.CENTER);
  initialiseCustomShape();
};
//...

Notice that the last argument to posterLayer = p.createGraphics(...) is document.getElementById("canvas"). Instead of creating a new offscreen canvas, posterLayer draws onto the existing <canvas> with id="canvas" from index.html, which is the canvas the <a-plane> uses as its texture. So everything we draw onto posterLayer, including the image() call at the end of the draw loop, ends up on the AR poster.
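The txts = posterTxt.string.split("\n\n") line in p.setup() splits the poster text wherever there is an empty line, and animateText() later draws each resulting entry with its own text() call; a single line break stays inside an entry. The short sketch below shows this with a made-up sample string (sample and forgiving are illustrative names). It also shows an optional regular-expression variant, an assumption rather than what the tutorial uses, that treats lines containing only spaces or tabs as paragraph breaks too.

```javascript
// A short, made-up stand-in for posterTxt.string
const sample = `Hydra Microgrant 2022 AR Poster

I proposed a tutorial
that spans two lines.

Github repo:`;

// Same call as in p.setup(): an empty line starts a new entry
const paragraphs = sample.split("\n\n");
console.log(paragraphs.length); // 3

// Optional variant (an assumption, not the tutorial's code): also split on
// "empty" lines that contain stray spaces or tabs
const forgiving = "First\n  \nSecond".split(/\n[ \t]*\n/);
console.log(forgiving); // [ 'First', 'Second' ]
```

With plain split("\n\n"), a line holding only spaces would not count as a break, so "First\n  \nSecond" would stay as one entry.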

Implement Custom Shape

Below are the functions for the shape we'll render hydra-synth onto. It's the same bezier-curve shape from the CodePen demo above, refactored for instance mode and to draw onto the shapeMask p5.Graphics object.

The sections after this show one way to isolate the hydra-synth graphics to this custom shape.

//hydra-mindAR-poster/sketch.js
//...
// Initialise the custom shape's points with the number defined in the numPoints variable.
function initialiseCustomShape() {
  for (let i = 0; i < numPoints; i++) {
    shapeVerts.push(createPoint());
  }
}
// Create a point with random co-ords, plus methods to move it later.
function createPoint(tx, ty) {
  return {
    x: tx || p.random(shapeMask.width),
    y: ty || p.random(shapeMask.height),
    adjust(d) {
      if (d >= shapeMask.width) {
        //calculateCenter();
        this.adjustX();
        this.adjustY();
      }
    },
    adjustX() {
      this.x =
        p.abs(p.sin(p.frameCount * 0.01)) * p.random(shapeMask.width * 1.5);
    },
    adjustY() {
      this.y =
        p.abs(p.sin(p.frameCount * 0.01)) * p.random(shapeMask.height * 1.5);
    },
  };
}
// Move the custom shape's points and calculate its center point.
function calculateCenter() {
  let count = 0;
  let totalX = 0;
  let totalY = 0;
  for (let i = 0; i < shapeVerts.length; i++) {
    let pt = shapeVerts[i];
    count++;
    let shapeScale = 0;
    let ang = p.noise(pt.x * shapeScale, pt.y * shapeScale, 0.75) * 0.02;
    let off =
      p.noise(pt.x * shapeScale, pt.y * shapeScale, 0.75) * p.random(25, 50); //speed of movement of the shape
    // Move each point by a noise-generated offset, then add its new position to the totals.
    totalX += pt.x +=
      p.cos(ang / 2) *
      off *
      p.noise(0.1) *
      p.abs(p.sin(p.frameCount % 0.0001)) *
      shapeMask.width;
    totalY += pt.y +=
      p.sin(ang / 2) *
      off *
      p.noise(0.1) *
      p.abs(p.sin(p.frameCount % 0.0001)) *
      shapeMask.height;
  }
  // Return the average position of the shape's vertices.
  return shapeMask.createVector(totalX / count, totalY / count);
}
// Draw the custom shape by connecting vertices with bezier curves.
function drawCustomShape(points, numVertices) {
  shapeMask.beginShape();
  for (let i = 0; i < numVertices; i++) {
    if (i === 0) {
      // Start the shape at the first point
      shapeMask.vertex(points[i].x, points[i].y);
    } else {
      bezierVertexP(points[i], points[i], points[i + 1]);
      i += 2; // Skip two points for the bezierVertex
    }
  }
  shapeMask.endShape();
}
// Define a custom function for the bezierVertex.
function bezierVertexP(a, b, c) {
  shapeMask.bezierVertex(a.x, a.y, b.x, b.y, c.x, c.y);
}
function drawGlasses(centerPoint) {
  // push()/pop() on shapeMask so these styles only apply to the glasses
  shapeMask.push();
  shapeMask.stroke(255);
  shapeMask.fill(255);
  // Draw glasses frames
  shapeMask.rect(centerPoint.x + 50, centerPoint.y, 50, 20);
  shapeMask.rect(centerPoint.x - 50, centerPoint.y, 50, 20);
  shapeMask.noStroke();
  shapeMask.fill(255);
  // Draw glasses arms
  shapeMask.rect(centerPoint.x + 50, centerPoint.y, 2.5);
  shapeMask.rect(centerPoint.x - 50, centerPoint.y, 2.5);
  // Draw glasses bridge
  shapeMask.stroke(255);
  shapeMask.line(
    centerPoint.x - 25,
    centerPoint.y + 10,
    centerPoint.x + 50,
    centerPoint.y + 10
  );
  shapeMask.pop();
}
// Adjust the positions of custom shape points based on distance from a random point.
function adjustCustomShapePoints() {
  for (let i = 0; i < shapeVerts.length; i++) {
    let pt = shapeVerts[i];
    let d = p.dist(pt.x, pt.y, p.random(p.width), p.random(p.height));
    pt.adjust(d); // Adjust the point's position
  }
}
//...
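calculateCenter() does two jobs at once: it nudges every point (the pt.x += ... and pt.y += ... expressions) and averages the new positions to give the centerPoint used by drawGlasses(). If you want to debug where the glasses land, it can help to separate the averaging into a pure function. This is a minimal sketch with a hypothetical centroid() helper; note that it returns the mean of the vertices, which is not the same as the geometric centre of the filled bezier area.

```javascript
// Hypothetical helper: average the points' positions without moving them
function centroid(points) {
  if (points.length === 0) {
    return { x: 0, y: 0 }; // avoid dividing by zero for an empty shape
  }
  let totalX = 0;
  let totalY = 0;
  for (const pt of points) {
    totalX += pt.x;
    totalY += pt.y;
  }
  return { x: totalX / points.length, y: totalY / points.length };
}

const square = [
  { x: 0, y: 0 },
  { x: 100, y: 0 },
  { x: 100, y: 100 },
  { x: 0, y: 100 },
];
console.log(centroid(square)); // { x: 50, y: 50 }
```

Inside the sketch you could call centroid(shapeVerts) after the points have moved and compare it with centerPoint; the two should agree, because calculateCenter() averages the updated positions.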

p5 Draw

The p5 draw loop calls the functions that draw the background, position the shape that displays Hydra, render Hydra, mask Hydra so it only appears on the shape, and animate the text. The last thing the draw function does is copy the main canvas onto posterLayer, which is what appears on the poster in AR.

//hydra-mindAR-poster/sketch.js
//...
p.draw = () => {
  p.randomSeed(2);
  centerPoint = calculateCenter();
  seconds = p.frameCount / refreshRate;
  runPosterBg();
  renderHydra();
  selectHydraGraphicsMaskShape();
  animateText();
  //copy the default canvas (from p.createCanvas() in p.setup) onto posterLayer, the AR poster
  posterLayer.image(
    p.get(0, 0, p.width, p.height),
    0,
    0,
    posterLayer.width,
    posterLayer.height
  );
};
// The background for the poster using p5
function runPosterBg() {
  // Add a gradient background
  for (let i = 0; i <= posterLayer.height; i++) {
    let interpolate = p.map(
      i,
      0,
      p.height / (p.sin(p.frameCount * 0.025) * 2),
      0,
      1
    );
    let rVal = p.abs(p.sin(p.frameCount * 0.01) * 0);
    let gVal = 0;
    // Interpolate the colour between a pulsing grey and a pulsing blue
    let lerpC = p.lerpColor(
      p.color(p.abs(p.sin(p.frameCount * 0.1) * 255), 255),
      p.color(rVal, gVal, p.abs(p.sin(p.frameCount * 0.01) * 255)),
      interpolate
    );
    posterLayer.stroke(lerpC);
    posterLayer.rect(
      -posterLayer.width / (p.sin(p.frameCount * 0.01) * 2),
      i,
      posterLayer.width * 1.5,
      i / 2
    );
    posterLayer.rect(
      -posterLayer.width / (p.sin(p.frameCount * 0.01) * 2),
      -i,
      posterLayer.width * 1.5,
      -i / 2
    );
  }
}
function animateText() {
  for (let i = 0; i < txts.length; i++) {
    let y =
      p.map(i, 0, txts.length, 0, p.height) +
      p.sin(i * 50 + p.frameCount * 0.02) * p.random(0, p.height);
    let x =
      p.random(0, p.width / 2) -
      ((p.frameCount * p.random(0.5, 5) + p.random(p.width)) % p.width) /
        0.2;
    posterLayer.fill(255);
    posterLayer.stroke(p.abs(p.sin(p.frameCount * 0.1)) * 128);
    posterLayer.strokeWeight(5.0);
    posterLayer.textFont("monospace");
    posterLayer.textSize(p.abs(p.sin(p.frameCount * 0.01) * 75)); //oscillate txt size w/ sin function
    posterLayer.text(txts[i], x, y);
  }
}
});

Keep in mind that the background generated by runPosterBg() won't show if you're debugging or testing the sketch with the camera and AR turned off by commenting out the <a-scene> element in index.html. The background is plain p5 code; ideally something like this would be written as a shader, but in this tutorial we'll just demo it as a background.
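The gradient in runPosterBg() depends on two p5 behaviours: p.map() doesn't clamp its result unless you pass its optional withinBounds argument, and p.lerpColor() clamps its amount to the range 0 to 1. Because the map() range ends at p.height / (p.sin(p.frameCount * 0.025) * 2), the range becomes negative whenever that sine is negative; interpolate then drops below 0 and every row gets the first colour. The sketch below mirrors that arithmetic in plain JavaScript so you can inspect the value for a given row and frame. mapRange() and gradientAmount() are hypothetical helpers, not p5 functions.

```javascript
// The same formula p5's map() uses when its optional withinBounds flag is off
function mapRange(value, start1, stop1, start2, stop2) {
  return ((value - start1) / (stop1 - start1)) * (stop2 - start2) + start2;
}

// Mirrors `interpolate` in runPosterBg() for row i, then clamps it to 0..1
// the way p5's lerpColor() treats its amount argument.
function gradientAmount(i, height, frameCount) {
  const stop = height / (Math.sin(frameCount * 0.025) * 2);
  const interpolate = mapRange(i, 0, stop, 0, 1);
  return Math.max(Math.min(interpolate, 1), 0);
}

console.log(gradientAmount(380, 1280, 40).toFixed(2)); // 0.50 (sine is positive)
console.log(gradientAmount(1000, 1280, 40)); // 1 (past the end of the range)
console.log(gradientAmount(100, 1280, 150)); // 0 (sine is negative)
```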

Isolate hydra-synth to shapeMask

This section is important: where you call this function in the p.draw() loop determines which hydra-synth graphics you'll see on the AR poster.

//hydra-mindAR-poster/sketch.js
//...
function selectHydraGraphicsMaskShape() {
  shapeMask.clear();
  hydraCG.clear();
  p.clear();
  //the main shape will be filled with hydra
  drawCustomShape(shapeVerts, 10);
  //second shape: outline only, no fill
  shapeMask.push();
  shapeMask.noFill();
  shapeMask.strokeCap(p.SQUARE);
  shapeMask.strokeWeight(2.5);
  shapeMask.stroke(
    255,
    p.abs(p.sin(p.frameCount * 0.01)) * p.random(90, 100)
  );
  //Call the custom shape function again using the remaining elements from the shapeVerts array
  drawCustomShape(shapeVerts.slice(10), shapeVerts.length - 10);
  shapeMask.pop();
  //Additional custom shape functions to animate the shape
  drawGlasses(centerPoint);
  adjustCustomShapePoints();
  //Store the element for the canvas with id="hydra_canvas" in the hydraImg variable
  hydraImg = hydraCG.select("#hydra_canvas");
  //Draw hydraImg onto the hydraCG buffer, at the same size as shapeMask
  hydraCG.image(hydraImg, 0, 0, shapeMask.width, shapeMask.height);
  //Reassign hydraImg to a new, empty p5.Image the same size as hydraCG
  hydraImg = p.createImage(hydraCG.width, hydraCG.height);
  //Copy the contents of hydraCG to hydraImg
  hydraImg.copy(hydraCG, 0, 0, p.width, p.height, 0, 0, p.width, p.height);
  //Apply shapeMask as a mask so hydra only renders on the bezier custom shape
  hydraImg.mask(shapeMask);
  //Display the masked hydraImg on the main canvas
  p.image(hydraImg, 0, 0);
}
//...
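Before the step-by-step breakdown, it may help to see what mask() does at the pixel level. p5's mask() uses the alpha channel of the mask image (in p5 1.x it draws the mask over the image with the canvas 'destination-in' composite operation), so the white colour in shapeMask matters less than its opacity: wherever shapeMask is transparent the Hydra pixels disappear, and the outline stroke, whose alpha is at most 100 out of 255, lets Hydra show through only partially. The plain-JavaScript approximation below multiplies each pixel's alpha by the mask's alpha. applyAlphaMask() is a hypothetical helper, and real canvas compositing works on premultiplied colours, so edge pixels can differ slightly.

```javascript
// Approximates p5's mask(): multiply each pixel's alpha by the mask's alpha.
// Both arrays are RGBA with 4 bytes per pixel, like p5's pixels[] or ImageData.data.
function applyAlphaMask(pixels, maskPixels) {
  if (pixels.length !== maskPixels.length) {
    throw new RangeError("image and mask must have the same number of pixels");
  }
  const out = Uint8ClampedArray.from(pixels);
  for (let i = 3; i < out.length; i += 4) {
    // Only alpha (indices 3, 7, 11, ...) changes; the colour channels are kept
    out[i] = Math.round((pixels[i] * maskPixels[i]) / 255);
  }
  return out;
}

// Three opaque "Hydra" pixels (red, green, blue) under three white mask pixels
// whose alpha is 0 (outside the shape), 128 (a faint stroke) and 255 (inside the fill)
const hydraPixels = Uint8ClampedArray.from([255, 0, 0, 255, 0, 255, 0, 255, 0, 0, 255, 255]);
const maskPixels = Uint8ClampedArray.from([255, 255, 255, 0, 255, 255, 255, 128, 255, 255, 255, 255]);
const masked = applyAlphaMask(hydraPixels, maskPixels);
console.log(Array.from(masked).filter((_, i) => i % 4 === 3)); // [ 0, 128, 255 ]
```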

This list breaks down the comments in the code explaining how to mask live graphics from hydra-synth to a shape defined in a separate p5 graphics buffer.

In the selectHydraGraphicsMaskShape(){} function:

- The shapeMask and hydraCG (Hydra graphics) buffers and the main canvas are cleared to prepare for new content.

- The main shape of the poster is drawn using the points created by the initialiseCustomShape() function. This shape uses the first 10 vertices from the shapeVerts array: drawCustomShape(shapeVerts, 10);

- A second instance of the shape is drawn between push() and pop() statements. This tutorial stores 20 points in the shapeVerts[] array, and the second drawCustomShape() call uses the remaining 10: drawCustomShape(shapeVerts.slice(10), shapeVerts.length - 10); This generates a second shape made of different vertex() points connected by bezierVertex() curves, but this time only its outline is drawn and animated, because shapeMask.noFill(); is called within the push and pop statements.

- The drawGlasses() function draws glasses positioned relative to the centerPoint of the custom shape. This shape also renders the same hydra-synth graphics.

- The adjustCustomShapePoints() function adjusts the positions of the custom shape's points based on their distance from a random point, which animates the shape.

- The <canvas> with id="hydra_canvas", where hydra-synth is running, is assigned to the hydraImg variable: hydraImg = hydraCG.select("#hydra_canvas");

- hydraCG.image(hydraImg, 0, 0, shapeMask.width, shapeMask.height); draws the Hydra canvas onto the hydraCG buffer, at the same size as shapeMask.

- p5's createImage() reassigns hydraImg to a new, empty p5.Image the same size as hydraCG. This enables the methods reserved for p5.Image objects.

- Because hydraImg is now a p5.Image, p5's copy() method can copy the contents of hydraCG onto it. The source and destination rectangles both cover the entire canvas: hydraImg.copy(hydraCG, 0, 0, p.width, p.height, 0, 0, p.width, p.height);

- The p5 mask() method on hydraImg isolates the hydra-synth graphics so that they only render on the shapes drawn in the shapeMask p5 graphics object. Remember that shapeMask is where all the custom bezier shapes are drawn; now the Hydra graphics are restricted to just those shapes: hydraImg.mask(shapeMask);

- Finally, the masked hydraImg is displayed on the main canvas with p.image(hydraImg, 0, 0);
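The breakdown above mentions that each drawCustomShape() call uses 10 of the 20 points. Because of the i += 2 inside the loop plus the loop's own i++, not every point is read: after the first iteration (i === 0), which is the vertex() call that starts the shape, bezierVertex() receives points 1, 1, 2, then 4, 4, 5, then 7, 7, 8, reusing point i as both control points. The hypothetical bezierIndexPlan() below replays that index pattern without p5, which is a quick way to confirm that points[i + 1] stays inside the array if you change numPoints or the 10-point split.

```javascript
// Replays the index pattern of drawCustomShape(points, numVertices) without p5:
// iteration 0 is the vertex() call, then each bezierVertex() reads points
// i, i and i + 1, and the loop advances by 3 (i += 2 plus the loop's i++).
function bezierIndexPlan(numVertices) {
  if (numVertices <= 0) {
    return []; // the original loop body never runs
  }
  const plan = [{ call: "vertex", indices: [0] }];
  for (let i = 1; i < numVertices; i += 3) {
    plan.push({ call: "bezierVertex", indices: [i, i, i + 1] });
  }
  return plan;
}

// True when every index the plan reads exists in an array of `length` points
function planFits(plan, length) {
  return plan.every((step) => step.indices.every((index) => index < length));
}

const firstShape = bezierIndexPlan(10);
console.log(firstShape.map((step) => step.indices.join(",")));
// [ '0', '1,1,2', '4,4,5', '7,7,8' ]
console.log(planFits(firstShape, 10)); // true
console.log(planFits(bezierIndexPlan(11), 10)); // false: it would read points[10] and points[11]
```

So both drawCustomShape(shapeVerts, 10) and drawCustomShape(shapeVerts.slice(10), 10) stay inside their arrays, while points 3, 6 and 9 of each half are never read.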

This process combines the hydra-synth graphics with the custom shape and displays the result. To get the result onto posterLayer, look back at the arguments passed to the posterLayer.image() call at the end of the p5 draw loop.


For the first argument, use the p5 get() method, but call it on the default canvas instance, p.get(0, 0, p.width, p.height), rather than on posterLayer. This grabs everything drawn on the main canvas, including the masked Hydra shapes, and posterLayer.image() then draws it onto the AR poster layer.

//...
//copy the default canvas (from p.createCanvas() in p.setup) onto posterLayer, the AR poster
posterLayer.image(
  p.get(0, 0, p.width, p.height),
  0,
  0,
  posterLayer.width,
  posterLayer.height
);
};
//...

Rendering Hydra-Synth Graphics in AR

This final section covers running hydra-synth alongside the MindAR library and p5.js. Every 10 seconds, the poster switches between two different Hydra sketches depending on whether the showBG boolean is true or false.

posterLayer's blendMode() also alternates between p5's default blend mode, BLEND, and the DIFFERENCE blend mode. Changing blend modes in p5 can create interesting visual interactions with a library like Hydra, so it's worth experimenting.

//hydra-mindAR-poster/sketch.js
//...
function renderHydra() {
  //Alternate between hydra sketches based on time
  if (seconds % 10 == 0) {
    // Check if 10 seconds have passed
    showBG = !showBG;
  }
  if (showBG) {
    posterLayer.blendMode(p.DIFFERENCE); // interesting interaction with the p5.js difference blend mode and hydra sketch
    //Define a custom shader written in GLSL to run in hydra
    h1.setFunction({
      name: "modulateSR",
      type: "combineCoord",
      inputs: [
        { type: "float", name: "multiple", default: 1 },
        { type: "float", name: "offset", default: 1 },
        { type: "float", name: "rotateMultiple", default: 1 },
        { type: "float", name: "rotateOffset", default: 1 },
      ],
      glsl: ` vec2 xy = _st - vec2(0.5);
        float angle = rotateOffset + _c0.z * rotateMultiple;
        xy = mat2(cos(angle),-sin(angle), sin(angle),cos(angle))*xy;
        xy*=(1.0/vec2(offset + multiple*_c0.r, offset + multiple*_c0.g));
        xy+=vec2(0.5);
        return xy;`,
    });
    h1.osc(60, 0.01)
      //Call the custom function like any other hydra function
      .modulateSR(h1.noise(3).luma(0.5, 0.075), 1, 1, Math.PI / 2) //luma() instead of thresh() avoids jagged edges
      .out(h1.o0);
  }
  if (!showBG) {
    posterLayer.blendMode(p.BLEND); //The default blend mode
    h1.src(h1.o0)
      //Generate visual feedback in hydra-synth
      .modulateHue(h1.src(h1.o0).scale(1.1), 1)
      .layer(
        h1
          .solid(0, 0, 0.75)
          .invert()
          .diff(h1.osc(5, 0.5, 5))
          .modulatePixelate(h1.noise(5), 2)
          .luma(0.005, 0)
          .mask(h1.shape(4, 0.125, 0.01).modulate(h1.osc([99, 5])))
      )
      .out(h1.o0);
  }
}
//...
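The switch in renderHydra() is driven by frames rather than the clock: seconds is p.frameCount / refreshRate, so with refreshRate at 25, showBG flips on frames 250, 500, 750 and so on. p.frameRate(refreshRate) only sets a target, so on a device that can't keep up, the switch happens less often than every 10 seconds. The sketch below replays the frame-based check and, as an alternative that is an assumption rather than the tutorial's code, derives showBG from elapsed milliseconds (for example p.millis() in the sketch). toggleFrames() and showBGFromMillis() are hypothetical names.

```javascript
// Replays the tutorial's check: seconds = frameCount / refreshRate, and showBG
// flips whenever seconds is an exact multiple of the period.
function toggleFrames(totalFrames, refreshRate = 25, periodSeconds = 10) {
  const frames = [];
  for (let frameCount = 1; frameCount <= totalFrames; frameCount++) {
    const seconds = frameCount / refreshRate;
    if (seconds % periodSeconds === 0) {
      frames.push(frameCount);
    }
  }
  return frames;
}

console.log(toggleFrames(1000)); // [ 250, 500, 750, 1000 ]

// Alternative (an assumption, not the tutorial's code): compute showBG from
// elapsed milliseconds, so it follows the clock even when frames are dropped.
function showBGFromMillis(elapsedMs, periodSeconds = 10) {
  return Math.floor(elapsedMs / (periodSeconds * 1000)) % 2 === 1;
}

console.log(showBGFromMillis(9999)); // false
console.log(showBGFromMillis(10000)); // true
console.log(showBGFromMillis(20000)); // false
```

In the sketch you could replace the first if block with showBG = showBGFromMillis(p.millis()); the state still starts as false and then flips every 10 seconds, like the original.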

When showBG is true and posterLayer uses the DIFFERENCE blend mode, we use Hydra's setFunction() method to define custom GLSL shader code, then call it together with Hydra's built-in functions in the Hydra sketch.

This tutorial uses the modulating Scale and Rotate example of setFunction from the Hydra book. Hydra makes it easy to generate and control fractals and other visual phenomena, so they're interesting to explore.
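To get a feel for what the modulateSR GLSL does, here is a CPU port of the same coordinate maths in plain JavaScript; it is only for inspection, since Hydra runs the GLSL on the GPU. For a combineCoord function, _st is the pixel coordinate and _c0 is the colour sampled from the texture passed as the first argument, here h1.noise(3).luma(0.5, 0.075). The shader moves the origin to the centre, rotates by rotateOffset + blue * rotateMultiple, divides x by offset + multiple * red and y by offset + multiple * green, then moves the origin back. The JavaScript function name mirrors the Hydra one, but it is a hypothetical helper.

```javascript
// CPU port of the GLSL passed to h1.setFunction({ name: "modulateSR", ... }).
// st: [x, y] pixel coordinate in 0..1
// c0: [r, g, b, a] colour sampled from the modulating texture, channels 0..1
function modulateSR(st, c0, multiple = 1, offset = 1, rotateMultiple = 1, rotateOffset = 1) {
  // vec2 xy = _st - vec2(0.5);  move the origin to the centre
  const x = st[0] - 0.5;
  const y = st[1] - 0.5;
  // float angle = rotateOffset + _c0.z * rotateMultiple;  (.z is the blue channel)
  const angle = rotateOffset + c0[2] * rotateMultiple;
  const cos = Math.cos(angle);
  const sin = Math.sin(angle);
  // GLSL mat2(cos, -sin, sin, cos) is filled column by column, so M * xy is:
  const rx = cos * x + sin * y;
  const ry = -sin * x + cos * y;
  // xy *= 1.0 / vec2(offset + multiple * _c0.r, offset + multiple * _c0.g);
  const sx = rx / (offset + multiple * c0[0]);
  const sy = ry / (offset + multiple * c0[1]);
  // xy += vec2(0.5);  move the origin back
  return [sx + 0.5, sy + 0.5];
}

// Black input, no rotation (rotateOffset 0), offset 2: the lookup point moves
// halfway towards the centre, i.e. the texture appears zoomed in 2x.
console.log(modulateSR([1, 0.5], [0, 0, 0, 1], 1, 2, 1, 0)); // [ 0.75, 0.5 ]
```

With the tutorial's call, modulateSR(h1.noise(3).luma(0.5, 0.075), 1, 1, Math.PI / 2), multiple and offset are 1, rotateMultiple is π/2 and rotateOffset keeps its default of 1. So where the noise is brighter, the lookup coordinates shrink towards the centre (up to 2x zoom when red is 1) and the rotation angle ranges from 1 to 1 + π/2 radians.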

I like this YouTube video in which, towards the end, emptyflash demonstrates how they use Hydra's setFunction method.

The second Hydra sketch generates visual feedback using a slightly modified version of the modulateHue() example from the interactive Hydra functions documentation.

This video series from Naoto Hieda is also a good introduction to feedback in Hydra.

Conclusion

Thanks for reading! If you've followed the tutorial, I hope it's useful for adding augmented reality interaction to posters, artworks, games, etc.

Preview on Glitch here.

Clone the project from GitHub here.

It's quite intensive on the device, but, as in the image above, I found it interesting to also send the computer's webcam feed into a Hydra sketch running with the MindAR library.

Credits:

I give full credit to artist and educator Ted Davis for the original code of this tutorial and the IG demonstration which inspired me to first try combining Hydra using the A-Frame P5 AR library written by Craig Kapp. I want to thank the creator of the MindAR JavaScript library for the tool and the helpful documentation.

I also thank the judges who selected my proposal for the Hydra microgrant: Olivia Jack, Antonio Roberts and Monrhea Carter.

This tutorial was made possible by the support of open-source projects, so support open source if you can!